You might have noticed a warning at the top of the release notes for SQL Server 2016 SP2 CU 16:

Note: After you apply CU 16 for SQL Server 2016 SP2, you might encounter an issue in which DML (insert/update/delete) queries that use parallel plans cannot complete any execution and encounter HP_SPOOL_BARRIER waits. You can use the trace flag 13116 or MAXDOP=1 hint to work around this issue. This issue is related to the introduction of fix for 13685819 and it will be fixed in the next Cumulative Update.

That warning links to bug reference 13685819 on the same page. There isn’t a separate KB article, only the description:

Fixes an issue with insert query in SQL Server 2016 that reads the data from the same table and uses a parallel execution plan may produce duplicate rows

The Bug

A more accurate description of the issue would be:

This bug can cause wrong results, incorrect error messages, and statement failure when:

- A data-modification statement requires Halloween Protection.

- That protection is provided by a Parallel Eager Spool.

- The spool is on the probe side of a Batch Mode Hash Join.

This issue affects Azure SQL Database and SQL Server 2014 to 2019 inclusive. Tested 23 March 2021 on:

- Microsoft SQL Server 2014 SP3 CU4 GDR – 12.0.6433.1

- Microsoft SQL Server 2016 SP2 CU16 – 13.0.5882.1

- Microsoft SQL Server 2017 RTM CU23 – 14.0.3381.3

- Microsoft SQL Server 2019 RTM CU9 – 15.0.4102.2

- Azure SQL Database – Standard S6

It is likely reproducible on SQL Server 2012 as well, but the restrictions in that early release of batch mode processing makes that more difficult.

The initial fix — only available in SQL Server 2016 SP2 CU16 — has the unfortunate side-effect of causing some parallel plans to ‘freeze’ and never complete. The fix can be disabled with global or start-up trace flag 13116. The trace flag is not effective at the session or query hint level.

All other versions of SQL Server and Azure SQL Database are vulnerable to the original incorrect-results problem.

About Halloween Protection

If you need a refresher on Halloween Protection, see my series. Briefly, Halloween Protection is required when the reading and writing sides of a data-changing statement might interact to cause incorrect results. Halloween Protection may be required for INSERT, UPDATE, DELETE, and MERGE statements, in different circumstances.

The simplest form of the required phase separation between the reading and writing sides of a statement is an Eager Table Spool. The idea is to read all rows from the reading side of the plan into a spool, before the first data modification is made.

Setup

The following script creates a heap table with 250,000 rows containing bigint numbers from 1 to 250,000:

CREATE TABLE dbo.Test (n bigint NOT NULL);

INSERT dbo.Test

WITH (TABLOCK)

(n)

SELECT TOP (250 * 1000)

n = ROW_NUMBER() OVER (ORDER BY @@SPID)

FROM sys.columns AS C1

CROSS JOIN sys.columns AS C2

CROSS JOIN sys.columns AS C3

ORDER BY

n ASC;

For SQL Server versions without batch mode on rowstore processing, we will also need the usual workaround table:

CREATE TABLE #BatchMe (dummy bit NULL);

CREATE CLUSTERED COLUMNSTORE INDEX c ON #BatchMe;

Demo

The demo inserts new rows into the test table using itself as a source:

INSERT dbo.Test

(n)

SELECT

T2.n + (OneTwo.v * 250 * 1000)

FROM

(

SELECT 1

UNION ALL

SELECT 2

) AS OneTwo (v)

JOIN dbo.Test AS T2

ON T2.n % 1 = OneTwo.v % 1

--LEFT JOIN #BatchMe AS BM

-- ON 0 = 1

The query uses a derived table with two rows to drive inserting two copies of the rows currently in the table. The join condition boils down to 0 = 0 (true for all rows). This is used to allow a hash join, which requires an equality predicate. The calculation in the SELECT clause ensures that each new row gets a unique number.

Uncomment the LEFT JOIN if you need the #BatchMe table to work around the lack of batch mode on rowstore.

Plan Shape

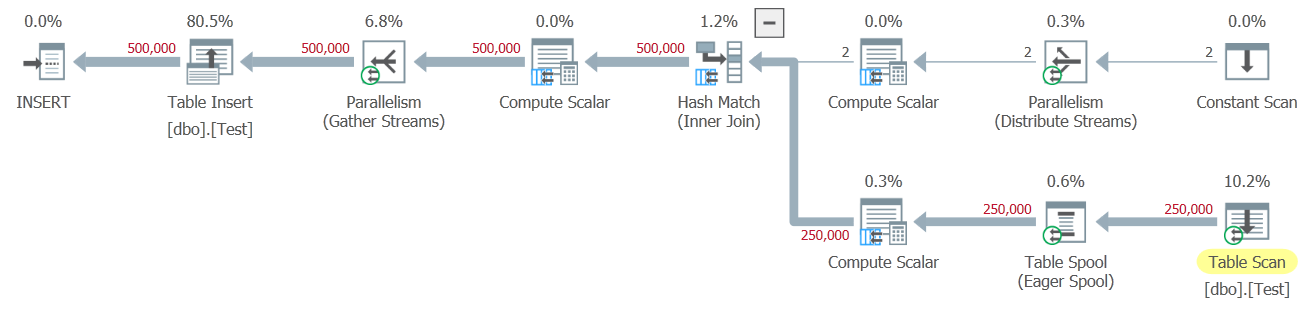

The desired estimated plan shape is:

Two rows from the constant scan join with the 250,000 rows from the heap to produce 500,000 new rows. A unique value for n is computed, then inserted. The essential features of the plan are:

- The hash join runs in batch mode.

- There is an eager table spool on the probe side of that join.

- The spool uses parallelism.

Ensuring the cost threshold for parallelism is low enough (e.g. the default of 5) ought to be enough to get a parallel plan. If not, use trace flag 8649 or the undocumented hint OPTION (USE HINT ('ENABLE_PARALLEL_PLAN_PREFERENCE')) to force a parallel plan.

Actual plan

Running the INSERT statement will likely produce a plan with runtime statistics like the following:

Remember the table has exactly 250,000 rows. Yet the plan shows SQL Server read 250,038 rows from it. This results in 500,076 new rows being added to the table, after the row count is doubled by the join. This is an incorrect result.

You can see the extra 38 rows (doubled up to 76) with a query like:

SELECT T.n, cnt = COUNT_BIG(*)

FROM dbo.Test AS T

GROUP BY T.n

HAVING COUNT_BIG(*) > 1 OR T.n > 750 * 1000

ORDER BY T.n;

I saw one set of 38 rows duplicating values of n in the range 516705 to 516742 inclusive. The second set of 38 rows resulted in values of n beyond the expected 750,000 — specifically values 766705 to 766742 inclusive.

The Bug

So what went wrong in this plan? How did SQL Server manage to read 38 more rows from a table than it had in total?

The eager table spool is supposed to provide full phase separation between the reading and writing sides of the statement. It fails to do that on this occasion, partly because the spool is executed using parallelism.

Multiple threads cooperate to fully scan the test table once. Each thread writes to its own copy of the eager table spool. The threads do not synchronize when their reads complete. This can result in one or more threads continuing to read while others have moved on to the writing phase.

Imagine the statement running at degree of parallelism (DOP) two for ease of discussion. One thread (T1) may complete reading its share of pages from the test table before thread T2 does. Some rows from T1 can make it through the hash join to the insert before T2 completes reading. Those newly-inserted rows can then be read by thread T2 — and ultimately inserted a second time.

The role of batch mode

This is not a problem in row mode parallel plans because eager spools complete their reads during their Open phase. All threads opening their copy of an eager spool synchronize after Open at an exchange (parallelism operator). This guarantees that all threads have completed their reads before any of the threads start reading from the spool (GetRow) to drive the writing side of the plan.

Batch mode processing breaks this guarantee because batch mode operators replace the exchange synchronization with their own implementation. To be clear, batch mode operators do synchronize themselves correctly using ‘sync points’, but these do not provide the behaviour the row-mode eager spool needs.

When a parallel eager spool appears on the probe side of a batch mode hash join, it does not synchronize correctly, so incorrect results are possible.

The fix (updated for CU17)

The initial fix (only available in SQL Server 2016 SP2 CU16) attempted to work around this issue by adding explicit synchronization for a parallel eager spool in the problematic circumstances. Each eager spool thread that completes its read phase waits on HP_SPOOL_BARRIER until all spool threads have completed their reads. Unfortunately, this can lead to a situation where the HP barrier wait never ends, resulting in a permanently stuck statement.

With luck, an improved fix will be released for all affected versions as soon as a reliable one can be found. Such a fix would have to work for parallel Halloween Protection spools, while also not causing other regressions, including unacceptable general performance impacts.

The initial fix was reverted in SQL Server 2016 SP2 CU17. The description there is misleading:

Fixes an issue with insert query in SQL Server 2016 that reads the data from the same table and uses a parallel execution plan may produce duplicate rows.

The wrong-results bug is not fixed. CU17 only reverts the initial attempt at a fix, so HP_SPOOL_BARRIER waits no longer exist. This only addresses the freezing side-effect of the CU16 changes. The issue remains the same in SQL Server 2019 CU18 (not fixed).

In the meantime, the only reliable work around is to avoid one of the components that causes the issue: batch mode processing, or a parallel eager spool used for Halloween Protection. The big hammer there is MAXDOP 1 of course.

Not Limited to Heaps

I chose a demo with a heap table because it has the fewest edge cases. The same issue can occur with clustered tables as well:

IF OBJECT_ID(N'dbo.Test', N'U') IS NOT NULL

DROP TABLE dbo.Test;

CREATE TABLE dbo.Test (n bigint NOT NULL);

INSERT dbo.Test

WITH (TABLOCK)

(n)

SELECT TOP (250 * 1000)

n = ROW_NUMBER() OVER (ORDER BY @@SPID)

FROM sys.columns AS C1

CROSS JOIN sys.columns AS C2

CROSS JOIN sys.columns AS C3

ORDER BY

n ASC;

-- NEW! Now a clustered table

CREATE UNIQUE CLUSTERED INDEX i ON dbo.Test (n);

The test insert is modified to include a (redundant) index seek:

INSERT dbo.Test

(n)

SELECT

T2.n + (OneTwo.v * 250 * 1000)

FROM

(

SELECT 1

UNION ALL

SELECT 2

) AS OneTwo (v)

JOIN dbo.Test AS T2

ON T2.n % 1 = OneTwo.v % 1

--LEFT JOIN #BatchMe AS BM

-- ON 0 = 1

WHERE

T2.n > 0 -- just for the seek

The target execution plan shape is:

Depending on your configuration, you might struggle to get this exact plan. A common alternative selected by the optimizer features a parallel batch mode sort after the hash join. This sort can provide full phase separation to prevent the Halloween Problem, so no eager spool is necessary.

The sort is introduced to allow ordered inserts to the b-tree, potentially enabling minimal logging via the fast load context. You can work around that in SQL Server by adding a OPTION (QUERYTRACEON 8795)hint to disable the ordered insert optimization. That trace flag is undocumented and unsupported, so it is not for production use.

If you can achieve the desired plan shape (batch mode hash join above parallel eager spool), executing the statement will likely fail with an error like:

Msg 2601, Level 14, State 1, Line 44

Cannot insert duplicate key row in object ‘dbo.Test’ with unique index ‘i’. The duplicate key value is (620934).

The statement has been terminated.

There are timing issues involved here. For example, to reproduce this on Azure SQL Database, I had to scale up to S8. Below that level, throttled I/O prevented things happening at the pace necessary for the bug to manifest.

Columnstore source

This final example was collected from a test run with the test table configured as a clustered columnstore, containing 600,000 rows:

On this occasion, the bug caused 999,593 rows to be read from a table with 600,000 rows.

Final Thoughts

I wrote this to provide more information on this issue than is available from official sources at the present time. I cannot claim that the above represents a complete description of the problem. I have not tested much beyond the scripts presented here. You may be able to find additional cases (beyond a batch mode hash join) that can lead to this problem arising.

Until a permanent fix is available, the safest workaround is to avoid parallel eager table spools and batch mode processing where a data-modification statement is vulnerable to the Halloween Problem.

Bug Status

-

This issue is not fixed in SQL Server 2016 SP2 CU17 or SP3.

SP2 CU17 only reverts the fix attempt in CU16 that added

HP_SPOOL_BARRIER, with unfortunate side-effects. The duplicate-rows issue remains.The transition to extended support means a fix in unlikely in the future.

-

The problem is not fixed in SQL Server 2017 CU 31.

The transition to extended support means a fix in unlikely in the future.

-

The bug is fixed in SQL Server 2019 CU21.

-

The issue is fixed in SQL Server 2022 RTM.

If you want to reproduce the bug on SQL Server 2022, enable trace flag 13116 to disable the fix (the same flag was used previously to enable the initial fix, which had problems).

My grateful thanks to Eugene Karpovich, who first alerted me to this issue in July 2020, having experienced this issue in a production system. It was one of the most interesting query plan analysis questions I’ve had in a while.

Paul, thanks again for the detailed description of the issue and for this article, in general. Very helpful.

ReplyDeleteIn regards to working with MS Support, I just got a call from them, and they confirmed that the fix introduced in SQL2016 SP2 CU16 will be removed in the next CU.

At the same time, they also assured that the issue won't slip through the cracks, and their development team is actively working on producing a permanent fix for it.

Will let you know if/when I get any more updates.

Regards

Eugene

Microsoft claim to have fixed this bug in SQL 2016 SP2 CU17 so I am thinking you will write a follow-up article. Perhaps you can include some history of batch mode as I always thought it came out in SQL 2017 for columnstore indexes.

ReplyDeleteThanks

Chris

Hi Chris, no the "fix" in CU17 is to revert the "fix" in CU16, so we're back to incorrect results only, not queries that run forever waiting on HP_SPOOL_BARRIER. I'll likely add a note in the main text soon (I only saw CU17 today).

DeleteBatch mode was first added in SQL Server 2012 with the first implementation of columnstore.

Hi Paul, the 2014 situation is not good because of the support right? Or do you think there is a chance of a new CU fixing this problem?

ReplyDeleteThere's always a chance 🙂

DeleteDo you think that forcing maxdop to 1 is a better solution than adding a hint to disable Batch Mode eg OPTION(USE HINT('DISALLOW_BATCH_MODE'))

ReplyDeleteChris

Well it depends. Both will 'work' to avoid the bug. I would expect batch mode to be more important for performance in a lot of cases.

DeleteA fix doesn't appear to have made it into SQL 2019 CU11

DeleteI take it this is still not fixed in the latest SQL 2019 CU?

ReplyDeleteStill unfixed as of SQL Server 2019 CU 15 (build 15.0.4198)

ReplyDelete