One of the transformations available to the SQL Server query optimizer is pulling a logical Group By (and any associated aggregates) above a Join.

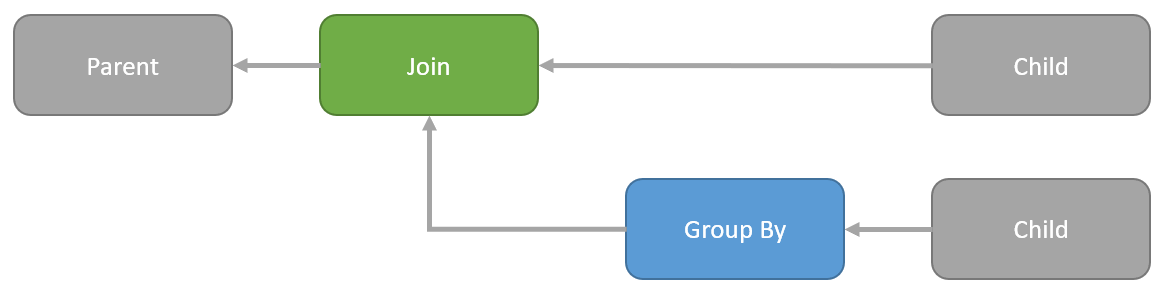

Visually, this means transforming a tree of logical operations from:

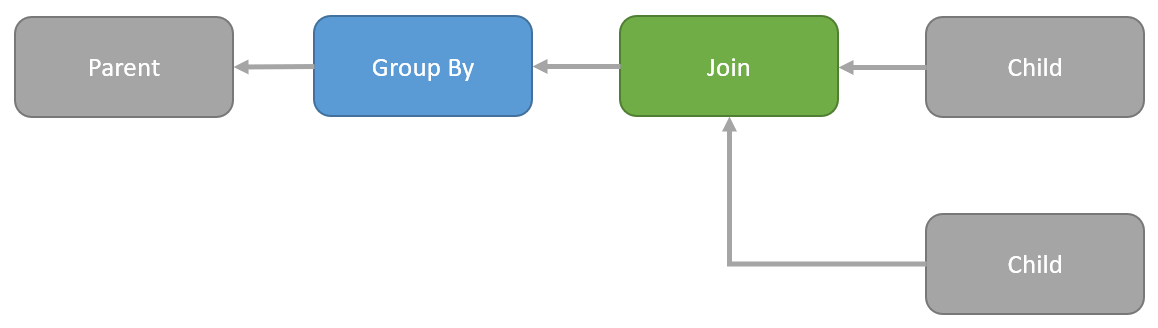

…to this:

The above diagrams are logical representations. They need to be implemented as physical operators to appear in an execution plan. The options are:

- Group By

- Hash Match Aggregate

- Stream Aggregate

- Distinct Sort

- Join

- Nested Loops Join

- Nested Loops Apply

- Hash Match Join

- Merge Join

When the optimizer moves a Group By above a Join it has to preserve the semantics. The new sequence of operations must be guaranteed to return the same results as the original in all possible circumstances.

One cannot just pick up a Group By and arbitrarily move it around the query tree without risking incorrect results.