Page Free Space

SQL Server internals by Paul White

About This Blog

Tuesday, 1 April 2025

My Content has Moved!

Tuesday, 17 September 2024

Why a Self-Join Requires Halloween Protection

This article was originally published on 𝕏.

I was asked recently why Halloween Protection was needed for data modification statements that include a self-join of the target table. This gives me a chance to explain, while also covering some interesting product bug history from the SQL Server 7 and 2000 days.

If you already know all there is to know about the Halloween Problem as it applies to SQL Server, you can skip the background section.

Sunday, 15 September 2024

Current State of the ANY Aggregate Transformation

This article was originally published on 𝕏.

SQL Server provides a way to select any one row from a group of rows, provided you write the statement using a specific syntax. This method returns any one row from each group, not the minimum, maximum or anything else. In principle, the one row chosen from each group is unpredictable.

The general idea of the required syntax is to logically number rows starting with 1 in each group in no particular order, then return only the rows numbered 1. The outer statement must not select the numbering column for this query optimizer transformation (SelSeqPrjToAnyAgg) to work.

Friday, 13 September 2024

A Small Sample of SQL Server Chaos

This article was originally published on 𝕏.

Background

Since SQL Server indexed views don’t allow MIN or MAX aggregates, I recently found myself writing a trigger instead. The trigger’s job was to keep a summary table in sync with a source query (which featured a MAX aggregate).

There’s a cost to running a trigger after every insert, update, or delete (with up to three trigger invocations per merge statement) but fast access to the summary data was worth it in this case. Though a trigger is a bit more expensive than the inline materialised view maintenance automatically added to the source statement’s execution plan by SQL Server, efficient trigger code and good indexing can help with the performance aspect (as always).

Friday, 30 August 2024

A Nonclustered Index Update Disaster

This article was originally published on 𝕏.

Introduction

Update execution plans are not something the T-SQL statement writer has much control over. You can affect the data reading side of the plan with query rewrites and hints, but there’s not nearly as much tooling available to affect the writing side of the plan.

Update processing can be extremely complex and reading data-changing execution plans correctly can also be difficult. Many important details are hidden away in obscure and poorly documented properties, or simply not present at all.

In this article, I want to you show a particularly bad update plan example. It has value in and of itself, but it will also give me a chance to describe some less well-known SQL Server details and behaviours.

Monday, 12 August 2024

Don't Mix with Datetime

This article was originally published on 𝕏.

Introduction

Microsoft encourages us not to use the datetime data type:

Avoid using datetime for new work. Instead, use the time, date, datetime2, and datetimeoffset data types. These types align with the SQL Standard, and are more portable. time, datetime2 and datetimeoffset provide more seconds precision. datetimeoffset provides time zone support for globally deployed applications.

Well, ok. Sensible and well-informed people might still choose to use datetime for performance reasons. Common date and time functions have optimised implementations in the SQL Server expression service for the datetime and smalldatetime data types.

Thursday, 20 June 2024

SQL Server Parallel Index Builds

|

| Parallel Index Building Execution Plan |

— Paul White (@SQL_Kiwi) June 20, 2024

Friday, 31 May 2024

Impossible Execution Plan Timings

- A batch mode operator reports CPU and elapsed times for that operator alone

- A row mode operator reports times for itself and all its children

Friday, 17 November 2023

Setting a Fixed Size for Transaction Log Virtual Log Files (VLFs)

The documentation has this to say about virtual log file (VLF) sizes:

The SQL Server Database Engine divides each physical log file internally into several virtual log files (VLFs). Virtual log files have no fixed size, and there’s no fixed number of virtual log files for a physical log file. The Database Engine chooses the size of the virtual log files dynamically while it’s creating or extending log files. The Database Engine tries to maintain a few virtual files. The size of the virtual files after a log file has been extended is the sum of the size of the existing log and the size of the new file increment. The size or number of virtual log files can’t be configured or set by administrators.

It then goes on to describe the problems having too many VLFs can cause, and how the database owner can arrange things so a reasonable number of VLFs are created. There’s even a (mostly accurate) formula for the number and size of VLFs SQL Server will create when asked to extend a transaction log file.

This is all very familiar, of course, but it is also dumb. Why on earth should we have to worry about internal formulas? It seems ridiculous to have to provision or grow a transaction log in pieces just to get a reasonable VLF outcome.

Wouldn’t it be better to be able to specify a fixed size for VLFs instead?

Starting with SQL Server 2022, there is now a way though it is undocumented and unsupported for the time being at least.

You can’t use it in a production database and there’s a real risk of it damaging your database beyond repair. Aside from those warnings, there’s no reason not to play around with it in a development environment. Or, if you’re simply curious to know more, read on.

Monday, 13 November 2023

Why Batch Mode Sort Spills Are So Slow

Batch mode sorting was added to SQL Server in the 2016 release under compatibility level 130. Most of the time, a batch mode sort will be much faster than the row mode equivalent.

This post is about an important exception to this rule, as recently reported by Erik Darling (video).

No doubt you’ll visit both links before reading on, but to summarize, the issue is that batch mode sorts are very slow when they spill—much slower than an equivalent row mode sort.

This also seems like a good opportunity to write down some sorting details I haven’t really covered before. If you’re not interested in those details and background to the current issue, you can skip down to the section titled, “Erik’s Demo”.

Friday, 20 October 2023

Fast Key Optimization for Row Mode Sorts

SQL Server row-mode sorts generally use a custom implementation of the well-known merge sort algorithm to order data.

As a comparison-based algorithm, this performs a large number of value comparisons during sorting—usually many more than the number of items to sort.

Although each comparison is typically not expensive, even moderately sized sorting can involve a very large number of comparisons.

SQL Server can be called upon to sort a variety of data types. To facilitate this, the sorting code normally calls out to a specific comparator to determine how two compared values should sort: lower, higher, or equal.

Although calling comparator code has low overhead, performing enough of them can cause noticeable performance differences.

To address this, SQL Server has always (since at least version 7) supported a fast key optimization for simple data types. This optimization performs the comparison using highly optimized inline code rather than calling out to a separate routine.

Wednesday, 2 August 2023

Importing a File in Batches

There are a million ways to import data into SQL Server. Most of the time, we want to ingest the new data as quickly and efficiently possible but that’s not always the case.

Sometimes, we need to accept data at a rate that will not dominate resource usage on the target system or cause excessive transaction log growth. In other cases, each row from the data source needs specific server-side processing to validate and persist the data across multiple relational tables, perhaps involving foreign keys and identity columns.

All this can be achieved with client-side tools and programming. It can also be done server-side by importing the raw data into a staging table before processing using T-SQL procedures.

Other times, the need arises to ingest data without using client-side tools and without making a complete copy of the raw data on the server. This article describes one possible approach in that situation.

Tuesday, 30 August 2022

Reducing Contention on the NESTING_TRANSACTION_FULL latch

Each additional worker thread in a parallel execution plan executes inside a nested transaction associated with the single parent transaction.

Parallel worker access to shared parent transaction structures is protected by a latch. A NESTING_TRANSACTION_READONLY latch is used for a read-only transaction. A NESTING_TRANSACTION_FULL latch is used if the transaction has modified the database.

This design has its roots in SQL Server 7, where read-only query parallelism was introduced. SQL Server 2000 built on this with parallel index builds, which for the first time allowed multiple threads to cooperate to change a persistent database structure. Many improvements have followed since then, but the fundamental parent-child transaction design remains today.

Though lightweight, a latch can become a point of contention when requested sufficiently frequently in incompatible modes by many different threads. Some contention on shared resources is to be expected; it becomes a problem when latch waits start to affect CPU utilisation and throughput.

Saturday, 23 July 2022

More Consistent Execution Plan Timings in SQL Server 2022

The updated showplan schema shipped with SSMS 19 preview 2 contains an interesting comment:

ExclusiveProfileTimeActive: true if the actual elapsed time (ActualElapsedms attribute) and the actual CPU time (ActualCPUms attribute) represent the time interval spent exclusively within the relational iterator.

What does this mean?

Thursday, 18 November 2021

Be Careful with LOBs and OPTION (RECOMPILE)

It sometimes makes sense to add OPTION (RECOMPILE) to a query. Typically this will be when:

- A good enough plan for the query is very sensitive to one or more parameters

- No good single value exists for the parameter to use in a hint

- Optimize for unknown doesn’t give a good result

- The plan might be expected to change over time

- The cost of recompiling the statement is much less than the expected execution time

- Recompiling every time is very likely to save more time and resources than it costs overall

All that is fairly well-known. The point of this short post is to draw your attention to another side-effect of adding OPTION (RECOMPILE) — the parameter embedding optimization (PEO).

Saturday, 5 June 2021

Empty Parallel Zones

An empty parallel zone is an area of the plan bounded by exchanges (or the leaf level) containing no operators.

How and why does SQL Server sometimes generate a parallel plan with an empty parallel zone?

Wednesday, 24 March 2021

Incorrect Results with Parallel Eager Spools and Batch Mode

You might have noticed a warning at the top of the release notes for SQL Server 2016 SP2 CU 16:

Note: After you apply CU 16 for SQL Server 2016 SP2, you might encounter an issue in which DML (insert/update/delete) queries that use parallel plans cannot complete any execution and encounter HP_SPOOL_BARRIER waits. You can use the trace flag 13116 or MAXDOP=1 hint to work around this issue. This issue is related to the introduction of fix for 13685819 and it will be fixed in the next Cumulative Update.

That warning links to bug reference 13685819 on the same page. There isn’t a separate KB article, only the description:

Fixes an issue with insert query in SQL Server 2016 that reads the data from the same table and uses a parallel execution plan may produce duplicate rows

Sunday, 11 October 2020

sql_handle and the SQL Server batch text hash

This article describes the structure of a sql_handle and shows how the batch text hash component is calculated.

Thursday, 8 October 2020

Closest Match with Sort Rewinds

In When Do SQL Server Sorts Rewind? I described how most sorts can only rewind when they contain at most one row. The exception is in-memory sorts, which can rewind at most 500 rows and 16KB of data.

These are certainly tight restrictions, but we can still make use of them on occasion.

To illustrate, I am going reuse a demo Itzik Ben-Gan provided in part one of his Closest Match series, specifically solution 2 (modified value range and indexing).

As Itzik’s title suggests, the task is to find the closest match for a value in one table in a second table.

As Itzik describes it:

The challenge is to match to each row from T1 the row from T2 where the absolute difference between T2.

valand T1.valis the lowest. In case of ties (multiple matching rows in T2), match the top row based onvalascending,keycolascending order.That is, the row with the lowest value in the

valcolumn, and if you still have ties, the row with the lowestkeycolvalue. The tiebreaker is used to guarantee determinism.

Tuesday, 4 August 2020

SQL Server 2019 Aggregate Splitting

The SQL Server 2019 query optimizer has a new trick available to improve the performance of large aggregations. The new exploration abilities are encoded in two new closely-related optimizer rules:

GbAggSplitToRangesSelOnGbAggSplitToRanges

The extended event query_optimizer_batch_mode_agg_split is provided to track when this new optimization is considered. The description of this event is:

Occurs when the query optimizer detects batch mode aggregation is likely to spill and tries to split it into multiple smaller aggregations.

Other than that, this new feature hasn’t been documented yet. This article is intended to help fill that gap.

Sunday, 26 July 2020

A bug with Halloween Protection and the OUTPUT Clause

Background

The OUTPUT clause can be used to return results from an INSERT, UPDATE, DELETE, or MERGE statement. The data can be returned to the client, inserted to a table, or both.

There are two ways to add OUTPUT data to a table:

- Using

OUTPUT INTO - With an outer

INSERTstatement.

For example:

-- Test table

DECLARE @Target table

(

id integer IDENTITY (1, 1) NOT NULL,

c1 integer NULL

);

-- Holds rows from the OUTPUT clause

DECLARE @Output table

(

id integer NOT NULL,

c1 integer NULL

);

Sunday, 5 July 2020

How MAXDOP Really Works

A few days ago I ran a Twitter poll:

The most popular answer gets highlighted by Twitter at the end of the poll, but as with many things on social media, that doesn’t mean it is correct:

Sunday, 31 May 2020

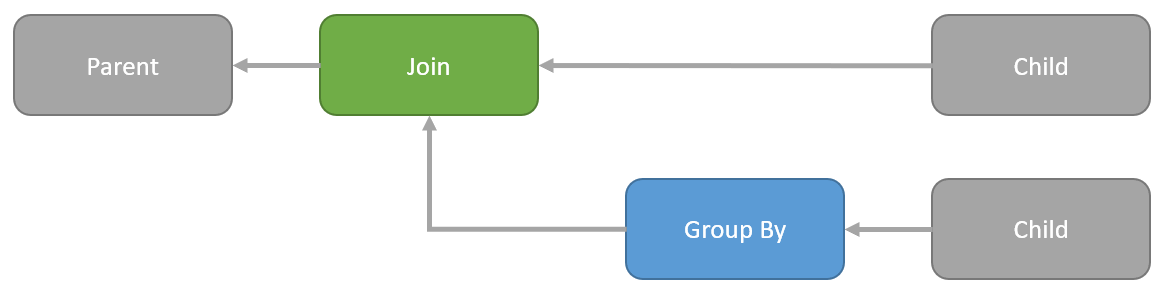

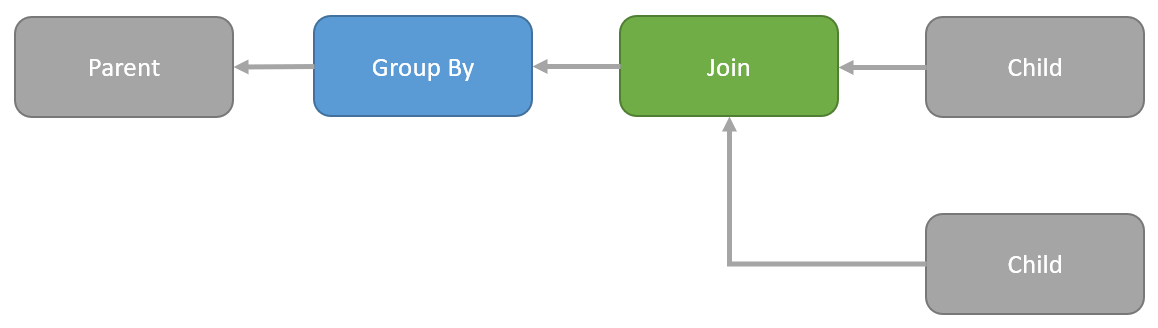

Pulling Group By Above a Join

One of the transformations available to the SQL Server query optimizer is pulling a logical Group By (and any associated aggregates) above a Join.

Visually, this means transforming a tree of logical operations from:

…to this:

The above diagrams are logical representations. They need to be implemented as physical operators to appear in an execution plan. The options are:

- Group By

- Hash Match Aggregate

- Stream Aggregate

- Distinct Sort

- Join

- Nested Loops Join

- Nested Loops Apply

- Hash Match Join

- Merge Join

When the optimizer moves a Group By above a Join it has to preserve the semantics. The new sequence of operations must be guaranteed to return the same results as the original in all possible circumstances.

One cannot just pick up a Group By and arbitrarily move it around the query tree without risking incorrect results.

Saturday, 24 August 2019

Batch Mode Bitmap Demos

This is a companion post to my main article Batch Mode Bitmaps in SQL Server. This post provides demos and illustrations to supplement the technical article.

The scripts presented here were run on SQL Server 2017 CU 16.

Sunday, 9 June 2019

Apply versus Nested Loops Join

SQL is a declarative language. We use SQL to write a logical query specification that defines the results we want. For example, we might write a query using either APPLY or JOIN that logically describes exactly the same results.

It is up to the query optimizer to find an efficient physical implementation of that logical requirement. SQL Server is free to choose any plan it likes, so long as the results are guaranteed to be the same as specified in the original SQL.

The optimizer is capable of transforming an apply to a join and vice versa. It generally tries to rewrite apply to join during initial compilation to maximize the searchable plan space during cost-based optimization. Having transformed an apply to a join early on, it may also consider a transformation back to an apply shape later on to assess the merits of e.g. an index loops join.