Sunday, 11 October 2020

sql_handle and the SQL Server batch text hash

This article describes the structure of a sql_handle and shows how the batch text hash component is calculated.

Thursday, 8 October 2020

Closest Match with Sort Rewinds

In When Do SQL Server Sorts Rewind? I described how most sorts can only rewind when they contain at most one row. The exception is in-memory sorts, which can rewind at most 500 rows and 16KB of data.

These are certainly tight restrictions, but we can still make use of them on occasion.

To illustrate, I am going to reuse a demo Itzik Ben-Gan provided in part one of his Closest Match series, specifically solution 2 (modified value range and indexing).

As Itzik’s title suggests, the task is to find the closest match for a value in one table in a second table.

As Itzik describes it:

The challenge is to match to each row from T1 the row from T2 where the absolute difference between T2.val and T1.val is the lowest. In case of ties (multiple matching rows in T2), match the top row based on val ascending, keycol ascending order. That is, the row with the lowest value in the val column, and if you still have ties, the row with the lowest keycol value. The tiebreaker is used to guarantee determinism.
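The requirement quoted above can be stated directly in T-SQL. The following is only a brute-force sketch of the task definition, not Itzik's solution 2 and not the sort-rewind approach. The table variables, the assumption that T1 has the same keycol and val columns as T2, and the sample rows are all invented for illustration.

```sql
-- Hypothetical stand-ins for T1 and T2 (column names assumed from the quote)
DECLARE @T1 table (keycol integer NOT NULL PRIMARY KEY, val integer NOT NULL);
DECLARE @T2 table (keycol integer NOT NULL PRIMARY KEY, val integer NOT NULL);

-- Invented sample data
INSERT @T1 (keycol, val) VALUES (1, 5), (2, 10);
INSERT @T2 (keycol, val) VALUES (1, 3), (2, 7), (3, 11), (4, 7);

-- For each T1 row, pick the single T2 row with the smallest absolute
-- difference in val, breaking ties by T2.val and then T2.keycol
SELECT
    T1.keycol AS keycol1,
    T1.val AS val1,
    CA.keycol AS keycol2,
    CA.val AS val2
FROM @T1 AS T1
CROSS APPLY
(
    SELECT TOP (1)
        T2.keycol,
        T2.val
    FROM @T2 AS T2
    ORDER BY
        ABS(T2.val - T1.val) ASC,
        T2.val ASC,
        T2.keycol ASC
) AS CA;
```

With this sample data, the T1 row with val 5 has three equally close candidates in T2 (val 3, 7, and 7), so the tiebreaker selects the row with val 3. The T1 row with val 10 matches the T2 row with val 11.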

Tuesday, 4 August 2020

SQL Server 2019 Aggregate Splitting

The SQL Server 2019 query optimizer has a new trick available to improve the performance of large aggregations. The new exploration abilities are encoded in two new closely-related optimizer rules:

- GbAggSplitToRanges

- SelOnGbAggSplitToRanges

The extended event query_optimizer_batch_mode_agg_split is provided to track when this new optimization is considered. The description of this event is:

Occurs when the query optimizer detects batch mode aggregation is likely to spill and tries to split it into multiple smaller aggregations.

Other than that, this new feature hasn’t been documented yet. This article is intended to help fill that gap.

Sunday, 26 July 2020

A bug with Halloween Protection and the OUTPUT Clause

Background

The OUTPUT clause can be used to return results from an INSERT, UPDATE, DELETE, or MERGE statement. The data can be returned to the client, inserted to a table, or both.

There are two ways to add OUTPUT data to a table:

- Using OUTPUT INTO

- With an outer INSERT statement.

For example:

```sql
-- Test table
DECLARE @Target table
(
    id integer IDENTITY (1, 1) NOT NULL,
    c1 integer NULL
);

-- Holds rows from the OUTPUT clause
DECLARE @Output table
(
    id integer NOT NULL,
    c1 integer NULL
);
```
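The excerpt stops after the table variable declarations. As a hedged sketch of how the two forms listed above might look, the following standalone batch repeats the declarations so it can run on its own. The inserted values are arbitrary, and the alias SQ is just an illustrative name.

```sql
-- Standalone batch: same table variable shapes as the excerpt
DECLARE @Target table
(
    id integer IDENTITY (1, 1) NOT NULL,
    c1 integer NULL
);

DECLARE @Output table
(
    id integer NOT NULL,
    c1 integer NULL
);

-- Form 1: OUTPUT INTO writes the output rows straight to a table
INSERT @Target (c1)
OUTPUT inserted.id, inserted.c1
INTO @Output (id, c1)
VALUES (1), (2);

-- Form 2: an outer INSERT consumes the OUTPUT of a nested INSERT
INSERT @Output (id, c1)
SELECT SQ.id, SQ.c1
FROM
(
    INSERT @Target (c1)
    OUTPUT inserted.id, inserted.c1
    VALUES (3), (4)
) AS SQ;

-- Both forms should leave matching rows in @Target and @Output
SELECT O.id, O.c1
FROM @Output AS O
ORDER BY O.id;
```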

Sunday, 5 July 2020

How MAXDOP Really Works

A few days ago I ran a Twitter poll:

The most popular answer gets highlighted by Twitter at the end of the poll, but as with many things on social media, that doesn’t mean it is correct:

Sunday, 31 May 2020

Pulling Group By Above a Join

One of the transformations available to the SQL Server query optimizer is pulling a logical Group By (and any associated aggregates) above a Join.

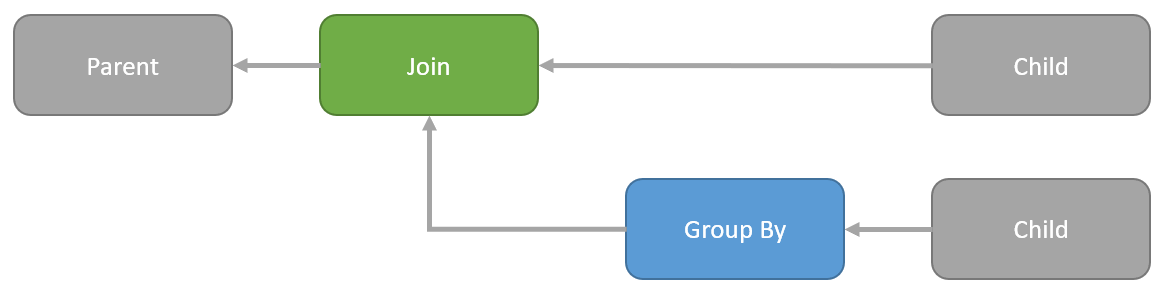

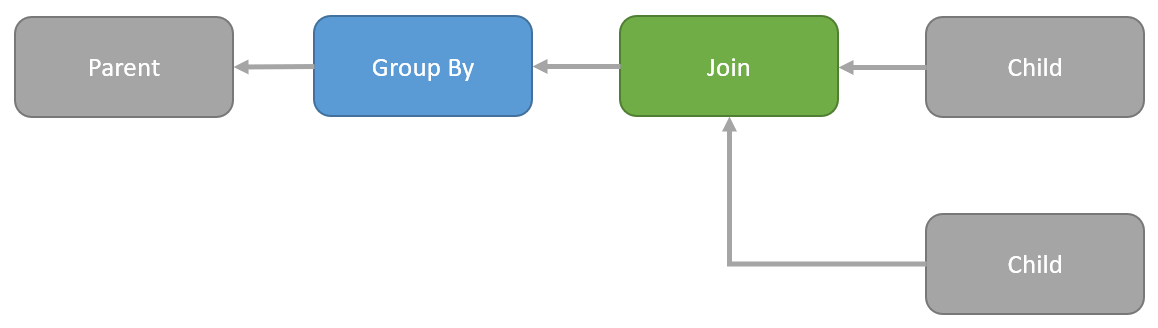

Visually, this means transforming a tree of logical operations from:

…to this:

The above diagrams are logical representations. They need to be implemented as physical operators to appear in an execution plan. The options are:

- Group By

    - Hash Match Aggregate

    - Stream Aggregate

    - Distinct Sort

- Join

    - Nested Loops Join

    - Nested Loops Apply

    - Hash Match Join

    - Merge Join

When the optimizer moves a Group By above a Join it has to preserve the semantics. The new sequence of operations must be guaranteed to return the same results as the original in all possible circumstances.

One cannot just pick up a Group By and arbitrarily move it around the query tree without risking incorrect results.
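To make the idea concrete at the SQL level, here is a small sketch with the aggregate written below the join and then above it. This shows only the logical equivalence, not the optimizer's internal rule. The table variables, column names, and data are hypothetical. The two queries are equivalent here because ProductID is the key of @Product, so the join cannot duplicate or merge groups.

```sql
-- Hypothetical tables for illustration only
DECLARE @Product table
(
    ProductID integer NOT NULL PRIMARY KEY,
    Name varchar(20) NOT NULL
);

DECLARE @Sales table
(
    SaleID integer NOT NULL PRIMARY KEY,
    ProductID integer NOT NULL,
    Quantity integer NOT NULL
);

INSERT @Product (ProductID, Name)
VALUES (1, 'Widget'), (2, 'Gadget');

INSERT @Sales (SaleID, ProductID, Quantity)
VALUES (1, 1, 10), (2, 1, 5), (3, 2, 7);

-- Group By below the Join: aggregate first, then join
SELECT P.ProductID, P.Name, S.TotalQuantity
FROM @Product AS P
JOIN
(
    SELECT S.ProductID, SUM(S.Quantity) AS TotalQuantity
    FROM @Sales AS S
    GROUP BY S.ProductID
) AS S
    ON S.ProductID = P.ProductID;

-- Group By above the Join: join first, then aggregate
SELECT P.ProductID, P.Name, SUM(S.Quantity) AS TotalQuantity
FROM @Product AS P
JOIN @Sales AS S
    ON S.ProductID = P.ProductID
GROUP BY P.ProductID, P.Name;
```

If ProductID were not unique in @Product, the second form would count each sale once per matching product row, which is the kind of semantic change the optimizer has to rule out.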

Saturday, 24 August 2019

Batch Mode Bitmap Demos

This is a companion post to my main article Batch Mode Bitmaps in SQL Server. This post provides demos and illustrations to supplement the technical article.

The scripts presented here were run on SQL Server 2017 CU 16.

Sunday, 9 June 2019

Apply versus Nested Loops Join

SQL is a declarative language. We use SQL to write a logical query specification that defines the results we want. For example, we might write a query using either APPLY or JOIN that logically describes exactly the same results.

It is up to the query optimizer to find an efficient physical implementation of that logical requirement. SQL Server is free to choose any plan it likes, so long as the results are guaranteed to be the same as specified in the original SQL.

The optimizer is capable of transforming an apply to a join and vice versa. It generally tries to rewrite apply to join during initial compilation to maximize the searchable plan space during cost-based optimization. Having transformed an apply to a join early on, it may also consider a transformation back to an apply shape later on to assess the merits of e.g. an index loops join.
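As a minimal sketch of two query forms that logically describe the same results, consider the following. The table variables and data are hypothetical. Which physical plan shape SQL Server chooses for either form is up to the optimizer and is not shown here.

```sql
-- Hypothetical tables for illustration only
DECLARE @Customer table
(
    CustomerID integer NOT NULL PRIMARY KEY,
    Name varchar(20) NOT NULL
);

DECLARE @Order table
(
    OrderID integer NOT NULL PRIMARY KEY,
    CustomerID integer NOT NULL
);

INSERT @Customer (CustomerID, Name)
VALUES (1, 'Ann'), (2, 'Bob');

INSERT @Order (OrderID, CustomerID)
VALUES (100, 1), (101, 1), (102, 2);

-- Written with JOIN
SELECT C.CustomerID, C.Name, O.OrderID
FROM @Customer AS C
JOIN @Order AS O
    ON O.CustomerID = C.CustomerID;

-- Written with APPLY: same logical result
SELECT C.CustomerID, C.Name, CA.OrderID
FROM @Customer AS C
CROSS APPLY
(
    SELECT O.OrderID
    FROM @Order AS O
    WHERE O.CustomerID = C.CustomerID
) AS CA;
```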

Tuesday, 2 May 2017

SQL Server Temporary Object Caching

Creating a table is a relatively resource-intensive and time-consuming operation. The server must locate and allocate storage space for the new data and index structures and make the corresponding entries in multiple system metadata tables. All this work has to be done in ways that will always work correctly under high concurrency, and which meet all of the ACID guarantees expected of a relational database.

In SQL Server, this means taking the right kinds of locks and latches, in the correct sequence, while also ensuring that detailed transaction log entries are safely committed to persistent storage in advance of any physical changes to the database. These log entries ensure the system can bring the database back to a consistent state in the event of a transaction rollback or system crash.

Dropping a table is a similarly expensive operation. Luckily, most databases do not create or drop tables with any great frequency. The obvious exception to this is the system database tempdb. This single database contains the physical storage, allocation structures, system metadata, and transaction log entries for all temporary tables and table variables across the entire SQL Server instance.

It is in the nature of temporary tables and table variables to be created and dropped much more frequently than other database object types. When this naturally high frequency of creation and destruction is combined with the concentrating effect of all temporary tables and table variables being associated with a single database, it is hardly surprising that contention can arise in the allocation and metadata structures of the tempdb database.

Friday, 23 January 2015

Allocation Ordered Scans

When an execution plan includes a scan of a b-tree index structure, the storage engine may be able to choose between two physical access strategies when the plan is executed:

- Follow the index b-tree structure; or,

- locate pages using internal page allocation information.

Where a choice is available, the storage engine makes the runtime decision on each execution. A plan recompilation is not required for it to change its mind.

The b-tree strategy starts at the root of the tree, descends to an extreme edge of the leaf level (depending on whether the scan is forward or backward), then follows leaf-level page links until the other end of the index is reached.

The allocation strategy uses Index Allocation Map (IAM) structures to locate database pages allocated to the index. Each IAM page maps allocations to a 4GB interval in a single physical database file, so scanning the IAM chains associated with an index tends to access index pages in physical file order (at least as far as SQL Server can tell).

Tuesday, 15 April 2014

Cardinality Estimation for Disjunctive (OR) Predicates in SQL Server 2014 Onward

Introduction

Back in January 2014, I wrote an article called Cardinality Estimation for Multiple Predicates that described the cardinality estimation process for queries with multiple predicates, from the point of view of the old and new cardinality estimators.

The article describes the various behaviours and formulas involved, along with the usual sprinkling of documented and undocumented trace flags. I described the formula SQL Server 2014 uses to calculate a cardinality estimate for multiple predicates connected with AND (conjunctive predicates), which was already relatively well-known.

Despite some fairly energetic research, and basic-to-intermediate skills with Excel, I was unable to deduce a similar formula for the disjunctive case, where predicates are connected by OR. The trace flag output I describe in the other article clearly showed that exponential backoff was used in the new 2014 cardinality estimator, but the precise formula eluded me.

Saturday, 31 August 2013

Nested Loops Prefetching

Nested loops join query plans can be a lot more interesting (and complicated) than is commonly realized.

One query plan area I get asked about a lot is prefetching. It is not documented in full detail anywhere, so this seems like a good topic to address in a blog post.

The examples used in this article are based on questions asked by Adam Machanic.

Wednesday, 28 August 2013

Parameter Sniffing, Embedding, and the RECOMPILE Options

Parameter Sniffing

Query parameterization promotes the reuse of cached execution plans, thereby avoiding unnecessary compilations, and reducing the number of ad-hoc queries in the plan cache.

These are all good things, provided the query being parameterized really ought to use the same cached execution plan for different parameter values. An execution plan that is efficient for one parameter value may not be a good choice for other possible parameter values.

When parameter sniffing is enabled (the default), SQL Server chooses an execution plan based on the particular parameter values that exist at compilation time. The implicit assumption is that parameterized statements are most commonly executed with the most common parameter values. This sounds reasonable enough (even obvious) and indeed it often works well.

A problem can occur when an automatic recompilation of the cached plan occurs. A recompilation may be triggered for all sorts of reasons, for example because an index used by the cached plan has been dropped (a correctness recompilation) or because statistical information has changed (an optimality recompile).

Whatever the exact cause of the plan recompilation, there is a chance that an atypical value is being passed as a parameter at the time the new plan is generated. This can result in a new cached plan (based on the sniffed atypical parameter value) that is not good for the majority of executions for which it will be reused.

It is not easy to predict when a particular execution plan will be recompiled (for example, because statistics have changed sufficiently) resulting in a situation where a good-quality reusable plan can be suddenly replaced by a quite different plan optimized for atypical parameter values.

One such scenario occurs when the atypical value is highly selective, resulting in a plan optimized for a small number of rows. Such plans will often use single-threaded execution, nested loops joins, and lookups. Serious performance issues can arise when this plan is reused for different parameter values that generate a much larger number of rows.
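The scenario can be sketched with a deliberately skewed table. Everything here is an assumption made for illustration: the temporary table, its columns, the row counts, and the use of sys.sp_executesql to produce a parameterized statement. Whether the plans actually differ on a given system depends on statistics and costing, so treat this as a starting point for experimentation.

```sql
-- Hypothetical skewed data: c1 = 1 is very common, c1 = 2 is rare
CREATE TABLE #Demo
(
    id integer IDENTITY (1, 1) NOT NULL PRIMARY KEY,
    c1 integer NOT NULL,
    padding char(100) NOT NULL DEFAULT ('')
);

INSERT #Demo (c1)
SELECT TOP (10000) 1
FROM sys.all_columns AS AC1
CROSS JOIN sys.all_columns AS AC2;

INSERT #Demo (c1) VALUES (2);

CREATE NONCLUSTERED INDEX IX_Demo_c1 ON #Demo (c1);

DECLARE @sql nvarchar(4000) =
    N'SELECT D.id, D.padding FROM #Demo AS D WHERE D.c1 = @c1;';

-- The first execution typically compiles a plan for the sniffed,
-- highly selective value (2)
EXECUTE sys.sp_executesql @sql, N'@c1 integer', @c1 = 2;

-- The second execution typically reuses that cached plan,
-- even though it now returns thousands of rows
EXECUTE sys.sp_executesql @sql, N'@c1 integer', @c1 = 1;

-- With OPTION (RECOMPILE), a fresh plan is built on every execution
DECLARE @sql_recompile nvarchar(4000) =
    N'SELECT D.id, D.padding FROM #Demo AS D WHERE D.c1 = @c1 OPTION (RECOMPILE);';

EXECUTE sys.sp_executesql @sql_recompile, N'@c1 integer', @c1 = 1;

DROP TABLE #Demo;
```

Comparing the actual execution plans of the second and third executions is one way to see whether the reused plan was a poor fit for the common value.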

Wednesday, 21 August 2013

Incorrect Results Caused By Adding an Index

Say you have the following two tables, one partitioned and one not:

```sql
CREATE PARTITION FUNCTION PF (integer)
AS RANGE RIGHT
FOR VALUES (1000, 2000, 3000, 4000, 5000);

CREATE PARTITION SCHEME PS
AS PARTITION PF
ALL TO ([PRIMARY]);

-- Partitioned
CREATE TABLE dbo.T1
(
    T1ID integer NOT NULL,
    SomeID integer NOT NULL,

    CONSTRAINT [PK dbo.T1 T1ID]
        PRIMARY KEY CLUSTERED (T1ID)
        ON PS (T1ID)
);

-- Not partitioned
CREATE TABLE dbo.T2
(
    T2ID integer IDENTITY (1,1) NOT NULL,
    T1ID integer NOT NULL,

    CONSTRAINT [PK dbo.T2 T2ID]
        PRIMARY KEY CLUSTERED (T2ID)
        ON [PRIMARY]
);
```

Wednesday, 24 July 2013

Two Partitioning Peculiarities

Table partitioning in SQL Server is essentially a way of making multiple physical tables (row sets) look like a single table. This abstraction is performed entirely by the query processor, a design that makes things simpler for users, but which makes complex demands of the query optimizer.

This post looks at two examples which exceed the optimizer’s abilities in SQL Server 2008 onward.

Thursday, 18 July 2013

Aggregates and Partitioning

The changes in the internal representation of partitioned tables between SQL Server 2005 and SQL Server 2008 resulted in improved query plans and performance in the majority of cases (especially when parallel execution is involved).

Unfortunately, the same changes caused some things that worked well in SQL Server 2005 to suddenly not work so well in SQL Server 2008 and later.

This post looks at one example where the SQL Server 2005 query optimizer produced a superior execution plan compared with later versions.

Monday, 8 July 2013

Working Around Missed Optimizations

In my last post, we saw how a query featuring a scalar aggregate could be transformed by the optimizer to a more efficient form. As a reminder, here’s the schema again:

Wednesday, 26 June 2013

Optimization Phases and Missed Opportunities

There are two complementary skills that are very useful in query tuning. One is the ability to read and interpret execution plans. The second is knowing a bit about how the query optimizer works to translate SQL text into an execution plan.

Putting the two things together can help us spot times when an expected optimization was not applied, resulting in an execution plan that is not as efficient as it could be.

The lack of documentation around exactly which optimizations SQL Server can apply (and in what circumstances) means that a lot of this comes down to experience, however.

Monday, 17 June 2013

Improving Partitioned Table Join Performance

The query optimizer does not always choose an optimal strategy when joining partitioned tables. This post looks at an example of that, showing how a manual rewrite of the query can almost double performance, while reducing the memory grant to almost nothing.

Tuesday, 11 June 2013

Hello Operator, My Switch Is Bored

This post is in two parts. The first part looks at the Switch execution plan operator. The second part is about an invisible plan operator and cardinality estimates on filtered indexes.

Thursday, 4 April 2013

Optimizer Limitations with Filtered Indexes

One of the filtered index use cases mentioned in the product documentation concerns a column that contains mostly NULL values. The idea is to create a filtered index that excludes the NULLs, resulting in a smaller nonclustered index that requires less maintenance than the equivalent unfiltered index.

Another popular use of filtered indexes is to filter NULLs from a UNIQUE index, giving the behaviour users of other database engines might expect from a default UNIQUE index or constraint: Uniqueness enforced only for non-NULL values.
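Both use cases can be sketched briefly. The temporary table, column names, and index names below are hypothetical, and the example assumes the session SET options required for filtered indexes (such as QUOTED_IDENTIFIER ON) are in effect, as they are by default in most client tools.

```sql
-- Hypothetical table: both columns allow NULL
CREATE TABLE #Filtered
(
    id integer IDENTITY (1, 1) NOT NULL PRIMARY KEY,
    mostly_null integer NULL,
    unique_if_present integer NULL
);

-- Use case 1: a smaller nonclustered index that excludes the NULLs
CREATE NONCLUSTERED INDEX IX_Filtered_mostly_null
ON #Filtered (mostly_null)
WHERE mostly_null IS NOT NULL;

-- Use case 2: uniqueness enforced only for non-NULL values
CREATE UNIQUE NONCLUSTERED INDEX UQ_Filtered_unique_if_present
ON #Filtered (unique_if_present)
WHERE unique_if_present IS NOT NULL;

-- Multiple NULLs are accepted because they fall outside the filter
INSERT #Filtered (mostly_null, unique_if_present)
VALUES (NULL, NULL), (NULL, NULL), (1, 100);

-- Inserting a second row with unique_if_present = 100 would be
-- rejected with a duplicate key error

DROP TABLE #Filtered;
```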

Unfortunately, the query optimizer has limitations where filtered indexes are concerned. This post looks at a couple of less well-known examples.

Wednesday, 20 March 2013

The Problem with Window Functions and Views

Introduction

Since their introduction in SQL Server 2005, window functions like ROW_NUMBER and RANK have proven to be extremely useful in solving a wide variety of common T-SQL problems. In an attempt to generalize such solutions, database designers often look to incorporate them into views to promote code encapsulation and reuse.

Unfortunately, a limitation in the SQL Server query optimizer often means that views containing window functions do not perform as well as expected. This post works through an illustrative example of the problem, details the reasons, and provides a number of workarounds.

Note: The limitation described here was first fixed in SQL Server 2017 CU 30. Optimizer fixes must be enabled using trace flag 4199 or the database scoped configuration option. The fix is standard behaviour without optimizer hotfixes under compatibility level 160 (SQL Server 2022).

Friday, 8 March 2013

Execution Plan Analysis: The Mystery Work Table

I love SQL Server execution plans. It is often easy to spot the cause of a performance problem just by looking at one closely. That task is considerably easier if the plan includes run-time information (a so-called ‘actual’ execution plan), but even a compiled plan can be very useful.

Nevertheless, there are still times when the execution plan does not tell the whole story, and we need to think more deeply about query execution to really understand a problem. This post looks at one such example, based on a question I answered.

Thursday, 21 February 2013

Halloween Protection – The Complete Series

I have written a four-part series on the Halloween Problem.

Some of you will never have heard about this issue. Those that have might associate it only with T-SQL UPDATE queries. In fact, the Halloween Problem affects execution plans for INSERT, UPDATE, DELETE and MERGE statements.

This is a topic I have been meaning to write about properly for years, ever since I read Craig Freedman’s 2008 blog post on the topic, which ended with the cryptic comment:

“…although I’ve used update statements for all of the examples in this post, some insert and delete statements also require Halloween protection, but I’ll save that topic for a future post.”

That future post never materialized, so I thought I would have a go. The four parts of the series are summarized and linked below. I hope you find the material interesting.

Wednesday, 20 February 2013

The Halloween Problem – Part 4

The Halloween Problem can have a number of important effects on execution plans. In this final part of the series, we look at the tricks the optimizer can employ to avoid the Halloween Problem when compiling plans for queries that add, change or delete data.